In this article we will take a look at three tools to find and remove duplicate files in Ubuntu. Sooner or later you may find your computer full of duplicate files: one day you discover that your hard drive holds multiple copies of the same files in different backup directories. The problem arises because it is easy to forget to clean these files up, so the drive accumulates more and more duplicates over time.

This is why it is always good to know how to find and delete duplicate files. To do this, we can use the tools detailed below on Unix-like operating systems. You have to be careful when removing duplicate files: if you are not, you can accidentally lose data. It is therefore advisable to pay close attention when using these tools.

Find and remove duplicate files in Ubuntu

For this task we will look at three available tools: Rdfind, Fdupes, and FSlint.

These three utilities are free, open source, and work on most Unix-like operating systems.

Rdfind

Rdfind is a free, open source utility to find duplicate files in directories and subdirectories.

It compares files based on their content, not their file names. Rdfind uses a ranking algorithm to differentiate between original and duplicate files. If it finds two or more identical files, Rdfind decides which one to treat as the original. Once it finds the duplicates, it reports them to us, and we can decide to remove or replace them.
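To illustrate what comparing by content rather than by name means, here is a minimal sketch that uses only GNU coreutils. It is not how Rdfind is implemented; `list_duplicates_by_hash` is a hypothetical helper name, and the choice of SHA-256 is an assumption made for the example.

```shell
# List groups of files under a directory whose SHA-256 digests match.
# list_duplicates_by_hash is a hypothetical helper, not part of Rdfind.
list_duplicates_by_hash() {
    dir="${1:-.}"
    # Hash every regular file, sort by digest, then keep only the lines
    # whose first 64 characters (the digest) occur more than once.
    find "$dir" -type f -exec sha256sum {} + \
        | sort \
        | uniq -w 64 --all-repeated=separate
}

# Example call: list_duplicates_by_hash ~/Descargas
```

Files with different names but the same digest appear together in one group, separated from the next group by a blank line.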

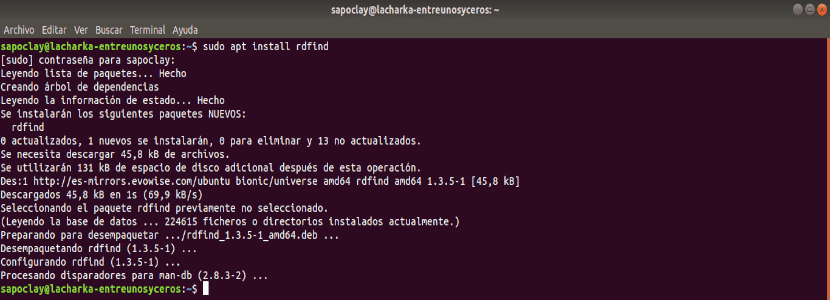

Rdfind installation

We open a terminal (Ctrl + Alt + T) and write:

sudo apt install rdfind

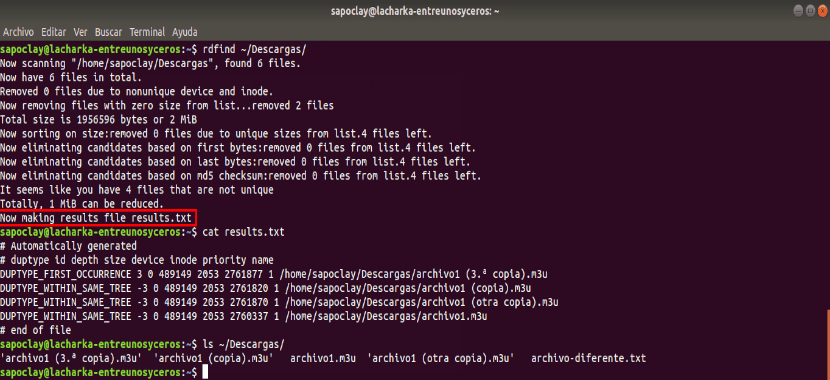

Use

Once installed, just run the rdfind command along with the path where you want to look for duplicate files.

rdfind ~/Descargas/

As you can see from the screenshot above, the rdfind command scans the ~/Descargas directory (the Spanish-locale name for ~/Downloads). It saves the results to a file called results.txt, located in the current working directory. You can see the names of the possible duplicate files in the results.txt file.
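If you only want the paths of the duplicates out of results.txt, a small filter can help. This is a sketch under an assumption: that results.txt uses the layout described in the rdfind man page, with comment lines starting with `#` and eight space-separated columns where the file name comes last. `list_rdfind_duplicates` is a hypothetical helper name.

```shell
# Print the paths rdfind marked as duplicates, skipping comment lines and
# the first occurrence (the presumed original) of each group.
# Assumption: eight space-separated columns, file name last.
list_rdfind_duplicates() {
    results="${1:-results.txt}"
    grep -v '^#' "$results" \
        | grep -v '^DUPTYPE_FIRST_OCCURRENCE ' \
        | cut -d ' ' -f 8-
}

# Example call: list_rdfind_duplicates results.txt
```

Check the header comment at the top of your own results.txt before relying on the column positions.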

You can get more information about all the options it offers through the help section or the man pages:

rdfind --help

man rdfind
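Among the options listed there are `-dryrun` and `-deleteduplicates`. Given the risk of data loss, a preview run before any real deletion is a sensible habit. The sketch below assumes the directory is passed as the first argument (`target` is a placeholder name) and simply reports when rdfind is missing.

```shell
# Preview what rdfind would delete without touching any file.
# target is a placeholder; pass a directory as the first argument.
target="${1:-.}"

if command -v rdfind >/dev/null 2>&1; then
    # -dryrun true only reports the planned actions;
    # -deleteduplicates true is the action being previewed.
    rdfind -dryrun true -deleteduplicates true "$target"
else
    echo "rdfind is not installed; run: sudo apt install rdfind" >&2
fi
```

Only after reviewing that output would you repeat the command without `-dryrun true`.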

fdupes

Fdupes is another command line utility to identify and remove duplicate files within specified directories and subdirectories. It is a free, open source utility written in the C programming language.

Fdupes identifies duplicates by comparing file sizes, then partial MD5 signatures, then full MD5 signatures, and finally by performing a byte-by-byte comparison for verification.
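The staged checks go from cheapest to most expensive, so most non-duplicates are ruled out early. Here is a rough sketch of the same idea for two files. It is not Fdupes' own code: `same_content` is a hypothetical helper, and the 4096-byte prefix used for the partial signature is an assumption chosen for the example.

```shell
# Return 0 if the two files have identical content, 1 otherwise.
# same_content is a hypothetical helper that mimics the staged checks.
same_content() {
    a="$1"; b="$2"
    # Stage 1: files of different sizes can never be duplicates.
    [ "$(wc -c < "$a")" -eq "$(wc -c < "$b")" ] || return 1
    # Stage 2: compare the MD5 of the first 4096 bytes (partial signature).
    [ "$(head -c 4096 "$a" | md5sum)" = "$(head -c 4096 "$b" | md5sum)" ] || return 1
    # Stage 3: compare the MD5 of the whole file.
    [ "$(md5sum < "$a")" = "$(md5sum < "$b")" ] || return 1
    # Stage 4: byte-by-byte comparison as the final verification.
    cmp -s "$a" "$b"
}

# Example call: same_content file1 file2 && echo "duplicates"
```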

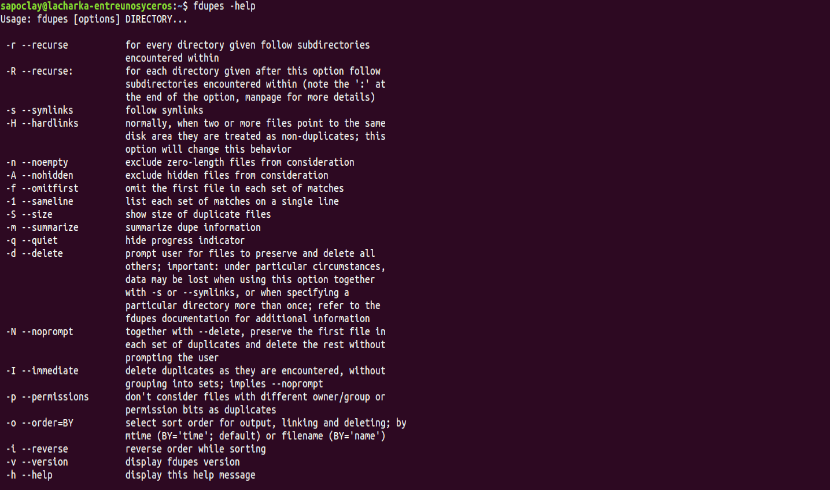

It is similar to the Rdfind utility, but Fdupes comes with quite a few options to perform operations, such as:

- Recursively search for duplicate files in directories and subdirectories.

- Exclude empty files and hidden files from consideration.

- Show the size of duplicates.

- And many more.

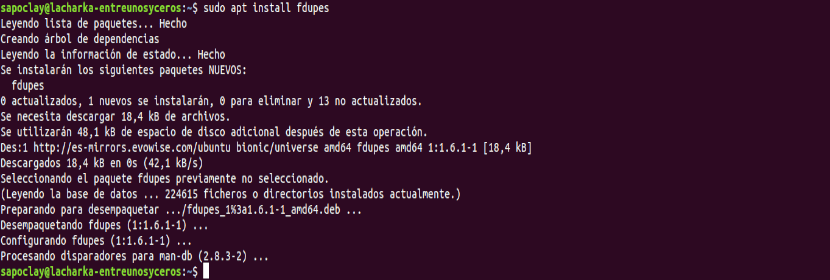

Fdupes installation

We open a terminal (Ctrl + Alt + T) and write:

sudo apt install fdupes

Use

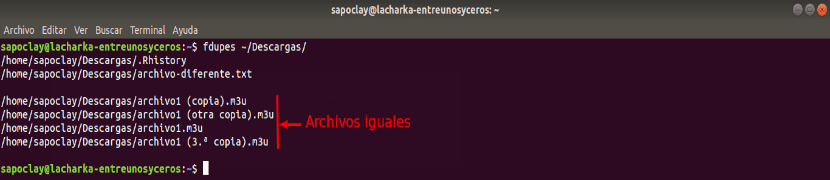

Using Fdupes is quite simple. Just run the following command to find the duplicate files in a directory, for example ~/Descargas (the Spanish-locale name for ~/Downloads).

fdupes ~/Descargas

We can also search for duplicate files from subdirectories, simply using the -r option.
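As a sketch, the recursive search can be combined with the other options mentioned earlier. The directory is taken from the first argument (`target` is a placeholder name), and the script only prints a hint if fdupes is missing.

```shell
# Recursive duplicate search with a few common fdupes options.
# target is a placeholder; pass a directory as the first argument.
target="${1:-.}"

if command -v fdupes >/dev/null 2>&1; then
    # -r  recurse into subdirectories
    # -n  exclude empty files
    # -A  exclude hidden files
    # -S  show the size of the duplicates
    fdupes -r -n -A -S "$target"
else
    echo "fdupes is not installed; run: sudo apt install fdupes" >&2
fi
```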

To remove all duplicates, the option to use is -d.

fdupes -d ~/Descargas

This command lets us choose which file to preserve in each set and deletes all the other duplicates. Be careful here: it is easy to delete original files by mistake.
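One cautious approach is to list the removal candidates first and read through them before running anything with -d. The sketch below relies on the `-f` (`--omitfirst`) option, which leaves out the first file of each set; `target` is a placeholder for the directory passed as the first argument.

```shell
# List every duplicate except the first file of each set, so the output
# can be reviewed before any deletion takes place.
# target is a placeholder; pass a directory as the first argument.
target="${1:-.}"

if command -v fdupes >/dev/null 2>&1; then
    # -r recurse into subdirectories, -f omit the first file in each set
    fdupes -r -f "$target"
else
    echo "fdupes is not installed; run: sudo apt install fdupes" >&2
fi
```

Nothing is deleted by this command; it only prints paths.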

To get more information on how to use fdupes, see the help section or the man pages:

fdupes --help

man fdupes

FSlint

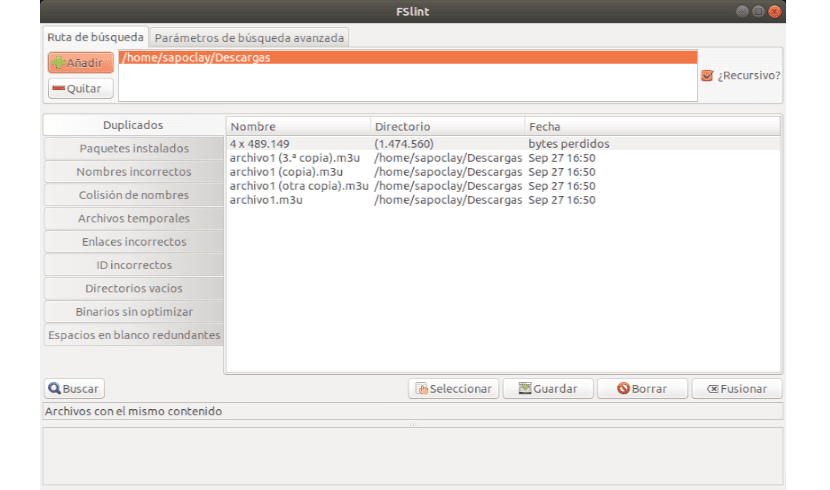

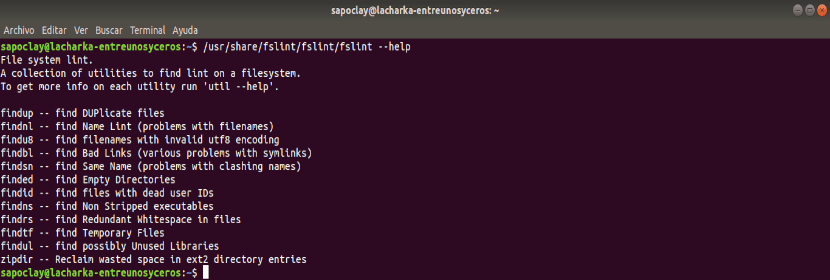

FSlint is another utility to find duplicate files that I found on GitHub. Unlike the other two utilities, FSlint has both GUI and CLI modes, which makes it easier to use if you prefer a graphical interface.

FSlint finds not only duplicates, but also bad symbolic links, bad names, temporary files, bad IDs, empty directories, non-stripped binaries, and more.

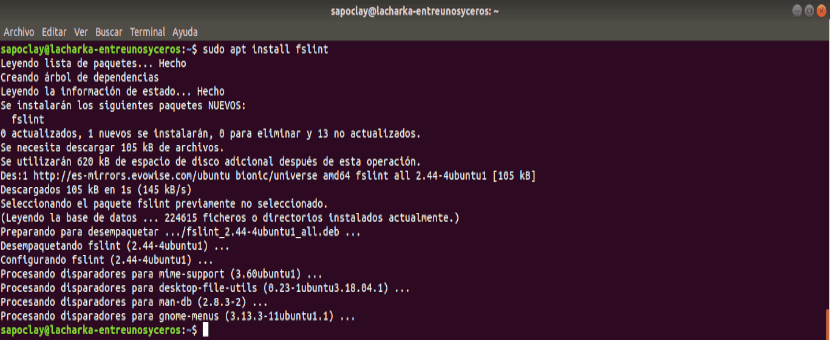

FSlint installation

We open a terminal (Ctrl + Alt + T) and write:

sudo apt install fslint

Use

Once it's installed, we can run it from the application menu.

As you can see, the FSlint interface is easy to use and self-explanatory. In the Search path tab, add the path you want to scan. Check the "Recursive?" option to search for duplicates in directories and subdirectories, then click the Search button. FSlint will quickly scan the given directory and list the duplicates it finds.

From the list, choose the duplicates you want to clean up. You can act on any of them with actions such as Save, Delete, Merge and Symbolic Link. In the Advanced Search Parameters tab, you can specify the paths to exclude while searching for duplicates.
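For the CLI mode, FSlint ships individual command line scripts alongside the GUI. As a hedged sketch, and assuming the Ubuntu package places them under /usr/share/fslint/fslint/, the duplicate finder `findup` could be called like this (`target` and `findup_path` are placeholder names):

```shell
# Run FSlint's command line duplicate finder, if it is present.
# Assumption: the package installs its scripts in /usr/share/fslint/fslint/.
target="${1:-.}"
findup_path="/usr/share/fslint/fslint/findup"

if [ -x "$findup_path" ]; then
    # List groups of duplicate files found under the target directory.
    "$findup_path" "$target"
else
    echo "findup not found at $findup_path; is fslint installed?" >&2
fi
```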

To get more details about FSlint, see the help section and the man pages:

/usr/share/fslint/fslint/fslint --help

man fslint

These are just three effective tools for finding and removing unwanted duplicate files on GNU/Linux.

Maybe you missed mentioning duff. Thanks.

Very good contribution! Thanks a lot!

Thank you for the simplicity and detail of your contribution, which has solved the problem for me. Thank you again!! Greetings,

FSlint does not exist in version 20.04. Is there any way I can install it?

Thank you

Spectacular rdfind. I tested it on Xubuntu 18-04 and it worked great!