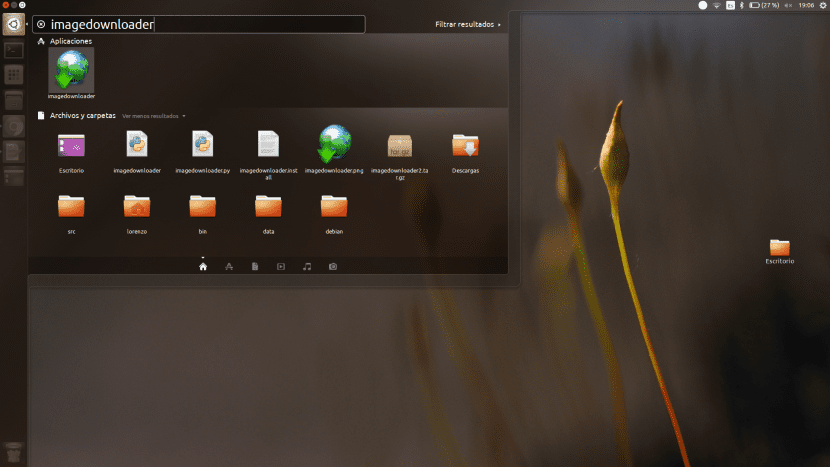

Today at Ubunlog we want to tell you about a program from the people at Atareao. It is called Picture Downloader and, as the name indicates, it downloads the images from a web page.

Until recently the program was run from the command line, but it now has a graphical interface that makes things much easier for the user, especially for those newer to the world of Linux. At Ubunlog we want to give a short review of the program and show you how to use it. Let's begin.

The first step is to install it. To do this, as always, we first add the repository that hosts the application (in this case, the Atareao repository), refresh the package list, and then install the package. That is to say:

sudo add-apt-repository ppa:atareao/atareao

sudo apt-get update

sudo apt-get install imagedownloader

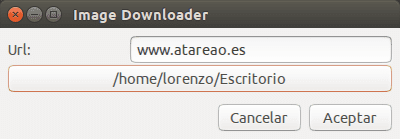

Once the program is installed, we can see that using it is really simple. We just enter the link of the page we want to download images from, select the directory where they should be saved, and start the download by clicking the OK button, as the screenshot shows.

For the most curious, the life of this program began four years ago and, as we told you before, it used to be run directly from the terminal, since it is actually a bash script that uses wget, a tool for downloading files from the web. Little by little, the errors that users found have been corrected, until the program reached its current state. Besides that, as we can see, it now has an easy-to-use graphical interface. Among the bugs that have been fixed, these are the most notable:

- Detection of images in the code (use of capital letters and single quotes)

- Prevent the same image from being downloaded multiple times

- Prevent an image from overwriting an already downloaded one

- Download both the thumbnail and the original image
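To make the fixes in this list more concrete, here is a minimal sketch in Python. It is not the program's code (the program is a bash script built on wget); the names `ImageLinkParser`, `find_image_urls` and `free_filename`, and the list of image extensions, are assumptions made for this illustration. It shows one way to detect images regardless of capital letters or single quotes, collect both the thumbnail (`<img src>`) and the linked original (`<a href>`), skip repeated URLs, and avoid overwriting a file that already exists.

```python
from html.parser import HTMLParser
from pathlib import Path
from urllib.parse import urljoin

# Assumed set of extensions; the real program may recognise others.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".gif")


class ImageLinkParser(HTMLParser):
    """Collect image URLs from <img src> (thumbnails) and <a href> (originals)."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.urls = []  # kept in page order, without duplicates

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names and accepts single
        # or double quotes, so <IMG SRC='x.jpg'> is handled like <img src="x.jpg">.
        attrs = dict(attrs)
        candidate = None
        if tag == "img":
            candidate = attrs.get("src")
        elif tag == "a":
            href = attrs.get("href")
            if href and href.lower().endswith(IMAGE_EXTENSIONS):
                candidate = href
        if candidate:
            url = urljoin(self.base_url, candidate)
            if url not in self.urls:  # never queue the same image twice
                self.urls.append(url)


def find_image_urls(html, base_url):
    """Return the absolute image URLs found in an HTML string."""
    parser = ImageLinkParser(base_url)
    parser.feed(html)
    return parser.urls


def free_filename(directory, name):
    """Return a path inside `directory` that does not clobber an existing file."""
    original = Path(name)
    path = Path(directory) / original.name
    counter = 1
    while path.exists():
        path = Path(directory) / f"{original.stem}_{counter}{original.suffix}"
        counter += 1
    return path
```

With wget itself, the `-nc` (`--no-clobber`) option covers the overwrite case by skipping files that already exist locally.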

In addition, the download system has been improved. Downloads are now performed asynchronously using several threads, with the limit set at 10. This means that up to 10 downloads can run simultaneously. As the program's developer himself indicates, in a possible future version the user may be able to choose the maximum number of simultaneous downloads, although if that number is very high, the website we are downloading the images from could be overloaded.
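The idea of capping simultaneous downloads at 10 can be sketched with a thread pool. This is only an illustration in Python under our own assumptions, not the program's actual implementation; `fetch`, `download_all` and `MAX_WORKERS` are hypothetical names chosen for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen

MAX_WORKERS = 10  # the limit mentioned in the article


def fetch(url):
    """Download one URL and return its content as bytes."""
    with urlopen(url, timeout=30) as response:
        return response.read()


def download_all(urls, fetch_one=fetch, max_workers=MAX_WORKERS):
    """Fetch every URL with at most `max_workers` downloads in flight.

    Returns a dict mapping each URL to its bytes, or to the exception
    raised while fetching it.
    """
    urls = list(urls)

    def safe_fetch(url):
        try:
            return fetch_one(url)
        except Exception as error:  # one failed image should not stop the rest
            return error

    # The pool never runs more than `max_workers` tasks at once; the
    # remaining URLs wait in its queue until a worker becomes free.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(safe_fetch, urls)
        return dict(zip(urls, results))
```

Raising `max_workers` speeds things up only to a point, and a large value puts more load on the server, which is the risk the developer warns about.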

In short, we hope that from now on you can download images from websites easily and fully automatically. If you have any questions, leave them in the comments section.

That's what JDownloader is for, too.

I use wget, and if the site doesn't give a link I use "inspect element" and get it... how lazy.