In this article we are going to take a look at Wget. GNU Wget is a free tool for downloading content from web servers quickly and simply. Its name combines World Wide Web (the "W") and the word "get", so it roughly means: get from the WWW.

Today there are dozens of applications that download files very efficiently. Most of them have web or desktop interfaces and are available for all major operating systems. On GNU/Linux, however (there is also a version for Windows), we have the powerful command-line download manager wget. It is often regarded as one of the most capable downloaders available, and it supports the HTTP, HTTPS and FTP protocols.

Download files with wget

Download a file

The simplest way to use this tool is to pass it the URL of the file we want:

wget http://sitioweb.com/programa.tar.gz

Download using different protocols

Like any good download manager, wget lets us request more than one download at a time. We can even mix different protocols in the same command:

wget http://sitioweb.com/programa.tar.gz ftp://otrositio.com/descargas/videos/archivo-video.mpg

Download by extension

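Another way to download multiple files that share the same extension is to combine recursive download (-r) with an accept list (-A). Wildcards such as the asterisk in the URL only work over FTP; over HTTP we point wget at the directory and let the accept list filter the files:

wget -r -A.pdf http://sitioweb.com/

This command does not always work: wget can only fetch files it finds links to (for example in a directory listing), and some servers may have blocked access to wget.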

Download a list of files

If we want to download a set of files we have come across, we only need to save their URLs in a file. We will create a list called archivos.txt and pass its name to the command. Place only one URL per line inside archivos.txt.

The command to download everything in the list is the following:

wget -i archivos.txt
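
As a minimal sketch of the step above, the list can be created from the shell before handing it to wget. The two URLs are the placeholder addresses used earlier in this article, not real downloads, and the wget line is left commented out so the snippet can be tried without touching the network:

```shell
# Placeholder URLs reused from the earlier examples; one URL per line.
printf '%s\n' \
  'http://sitioweb.com/programa.tar.gz' \
  'ftp://otrositio.com/descargas/videos/archivo-video.mpg' > archivos.txt

# Count the non-empty lines as a quick check before starting.
url_count=$(grep -c . archivos.txt)
echo "URLs queued: $url_count"

# Uncomment to download everything in the list:
# wget -i archivos.txt
```

Checking the count first is only a convenience; wget reads the file the same way whether or not we inspect it beforehand.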

Resume a download

If the download was interrupted for whatever reason, we can resume it from where it left off by adding the -c option to the wget command:

wget -c -i archivos.txt

Add a log about the download

If we want a log of the download, so that we can track down any problems with it, we add the -o option as shown below:

wget -o reporte.txt http://ejemplo.com/programa.tar.gz

Limit download bandwidth

In very long downloads we can limit the bandwidth wget uses with the --limit-rate option. This keeps the download from taking up the whole connection while it runs; here the limit is 50 kilobytes per second:

wget -o reporte.log --limit-rate=50k ftp://ftp.centos.org/download/centos5-dvd.iso
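
To get a feel for what a limit like this costs in time, here is a rough calculation in shell arithmetic. The 700 MiB file size is an assumed figure for illustration only (the article does not state the size of the image), and the k suffix is treated as 1024 bytes:

```shell
# Assumed values for illustration: a 700 MiB file and the 50k limit used above.
size_mib=700
rate_kib_per_s=50

# Convert MiB to KiB, then divide by the rate to get the duration in seconds.
total_s=$(( size_mib * 1024 / rate_kib_per_s ))

# Split the total into whole hours and the remaining whole minutes.
hours=$(( total_s / 3600 ))
minutes=$(( total_s % 3600 / 60 ))
echo "About ${hours}h ${minutes}m at ${rate_kib_per_s} KiB/s"
```

With these numbers the estimate comes to just under four hours, which is the kind of trade-off to weigh before choosing a limit.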

Download with username and password

If we want to download from a site that requires a username and password, we only have to use these options:

wget --http-user=admin --http-password=12345 http://ejemplo.com/archivo.mp3

Download attempts

By default, wget makes 20 attempts to establish the connection and start the download. On very saturated sites even 20 attempts may not be enough. The -t option lets us raise the number of attempts:

wget -t 50 http://ejemplo.com/pelicula.mpg

Download a website with wget

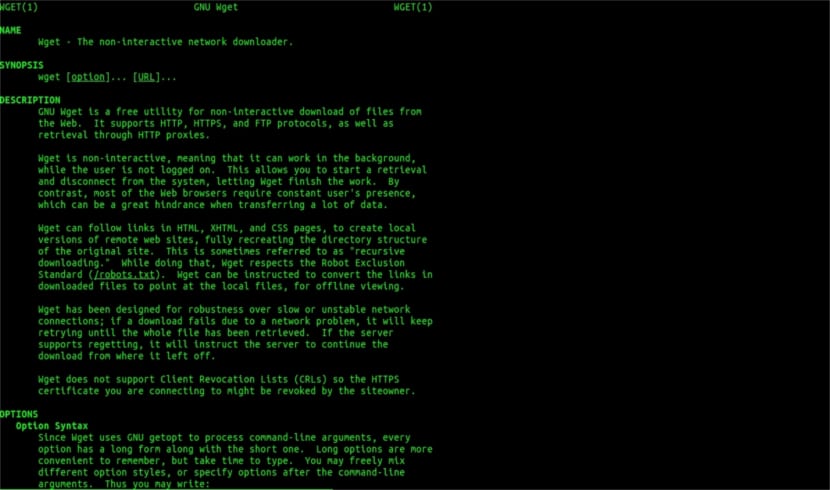

Wget man help

Wget is not limited to downloading files. We can also download a full page. We just have to write something like:

wget www.ejemplo.com

Download a website and its extra elements

With the -p option we also download all the extra elements the page needs, such as style sheets, inline images, etc.

If we add the -r option, wget downloads recursively, up to 5 levels deep from the site:

wget -r www.ejemplo.com -o reporte.log

Convert links to local links

By default, the links within the downloaded pages still point to the full address on the original domain. If we download the site recursively to study it offline, we can use the --convert-links option, which turns them into local links:

wget --convert-links -r http://www.sitio.com/

Get a full copy of the site

We can also obtain a complete copy of a site. The --mirror option is equivalent to -r -N -l inf --no-remove-listing: recursion with no depth limit, timestamping so that each downloaded file keeps its original modification time, and FTP directory listings kept.

wget --mirror http://www.sitio.com/

Transform extensions

If we download the entire site to view it offline, several downloaded files may not open because of extensions such as .cgi, .asp, or .php. With the --html-extension option (called --adjust-extension in newer versions of wget), pages served as HTML are saved with an .html extension:

wget --mirror --convert-links --html-extension http://www.ejemplo.com
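
Before launching a long mirror job it can help to assemble the options in one place and print the command for review. The sketch below does that with the options covered in this article. The target address is the placeholder domain used above, the log file name is the one from the earlier examples, and the real wget call is left commented out:

```shell
# Placeholder target; replace with the site you want to copy.
site="http://www.ejemplo.com"

# Collect the mirroring options in the positional parameters.
set -- --mirror --convert-links --html-extension
# -p also fetches the style sheets and images each page needs.
set -- "$@" -p
# Write the log to a file instead of the terminal.
set -- "$@" -o reporte.log

# Print the assembled command so it can be checked before running it.
mirror_cmd="wget $* $site"
echo "$mirror_cmd"

# Uncomment to run the mirror for real:
# wget "$@" "$site"
```

Building the option list step by step makes it easy to comment out a single option while experimenting.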

These are just general guidelines on what you can do with Wget. Anyone who wants more can consult the online manual to see all the possibilities this wonderful download manager offers.

At "Download by extension" I stopped reading. You can't download what you don't know exists. Unless the requested directory allows file listing and lacks an index (and both must be true at the same time), what you describe cannot be done. What a level.

Hello Rubén, ignorance is a bit daring.

What you describe can be done with a simple Google search:

filetype:pdf site:ubunlog.com

In this example there are no PDFs on this blog, but change the domain at the end to the site you prefer and you will see how easy it is to list all the files of one type on a website.

Have a nice day.

But wget does not connect to google to find the pdfs that are in a url. The web directory must be open and there must be an index page generated by mod_autoindex or similar, as Rubén Cardenal says.

"This command does not always work, as some servers may have blocked access to wget."

I do not agree with this amendment that was added to the article (although technically it is possible to block certain user agents based on the HTTP request headers and return a 403 "not allowed" message), and I will explain why:

All Apache web servers (and I'm talking about a considerable percentage of servers) allow globbing by default (excellent Wikipedia article, worth reading: https://es.wikipedia.org/wiki/Glob_(inform%C3%A1tica) ).

In practice this means, as Mr. Rubén points out (and he is right), THAT IF THERE IS NO FILE CALLED "index.php" or "index.html" (or even simply "index") the server will quietly return a list of files and directories (in the form of an HTML page, of course, with a link for each file). MOST WEB SERVERS DISABLE THIS FEATURE THROUGH THE .htaccess FILE (strictly speaking, Apache2) FOR SECURITY REASONS.

This is where the versatility of wget comes in (see its history, again on Wikipedia, the one you know best: https://es.wikipedia.org/wiki/GNU_Wget ): it can parse that information and extract only the extensions we request.

Now, in the event that this does not work for one reason or another, we can try other advanced wget features. I quote directly in English:

You want to download all the GIFs from a directory on an HTTP server. You tried 'wget http://www.example.com/dir/*.gif', but that didn't work because HTTP retrieval does not support GLOBBING (the capitals are mine). In that case, use:

wget -r -l1 --no-parent -A.gif http://www.example.com/dir/

More verbose, but the effect is the same. '-r -l1' means to retrieve recursively (see Recursive Download), with maximum depth of 1. '--no-parent' means that references to the parent directory are ignored (see Directory-Based Limits), and '-A.gif' means to download only the GIF files. '-A "*.gif"' would have worked too.

IF IT IS RUN IN THIS LAST WAY, wget will create a folder named after the requested web address inside the directory where we are working, make subdirectories if necessary, and place there, for example, the .gif images we requested.

--------

HOWEVER, if it is still not possible to obtain only certain kinds of files (*.jpg, for example), we will have to use the "--page-requisites" parameter, which downloads all the internal elements of an HTML page (images, sounds, CSS, etc.) together with the HTML page itself ("--page-requisites" can be abbreviated to "-p"). That would be the equivalent of downloading something like an "mhtml": https://tools.ietf.org/html/rfc2557

I hope this information is useful to you.

Thanks for the notes. Regards.

I think you have an error, the first two lines have the same command.

Thank you very much, very good tutorial!